On a sunny day (Sun, 10 Mar 2024 13:47:48 -0400) it happened legg

On a sunny day (Sat, 09 Mar 2024 20:59:19 -0500) it happened legg

On a sunny day (Thu, 07 Mar 2024 17:12:27 -0500) it happened legg

A quick response from the ISP says they're blocking the three hosts and 'monitoring the situation'.

All the downloading was occurring between certain hours of the day, in sequence: first one host between 11 and 12pm, then a day's rest, then the second host at the same time on the third day, then the third host on the fourth day.

Same files 262 times each, 17Gb each.

Not normal web activity, as I know it.

RL
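The pattern described above (the same files fetched hundreds of times, one host after another) is the kind of thing a per-host tally of a transfer log makes visible. A minimal Python sketch, assuming the log has already been parsed into (host, path, bytes) tuples; the post doesn't give the log format, so the parsing step is left out, and the addresses and paths below are made up for illustration.

```python
from collections import Counter, defaultdict


def tally(records):
    """Summarise transfer records per host.

    records: iterable of (host, path, nbytes) tuples, already parsed from
    whatever log the server or ISP provides (format assumed, not shown).
    Returns {host: (downloads_per_path, total_bytes)}.
    """
    per_path = defaultdict(Counter)  # host -> Counter of path -> download count
    total = Counter()                # host -> total bytes served
    for host, path, nbytes in records:
        per_path[host][path] += 1
        total[host] += nbytes
    return {host: (dict(paths), total[host]) for host, paths in per_path.items()}


if __name__ == "__main__":
    # Hypothetical sample records using documentation-range addresses.
    sample = [
        ("198.51.100.7", "/pub/a.zip", 100),
        ("198.51.100.7", "/pub/a.zip", 100),
        ("198.51.100.8", "/pub/b.zip", 50),
    ]
    for host, (paths, nbytes) in sorted(tally(sample).items()):
        print(host, paths, nbytes)
```

A host whose per-file counts run into the hundreds stands out immediately in this kind of summary.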

Many sites have an 'I'm not a bot' sort of thing you have to go through to get access.

Any idea what's involved, preferably anything that doesn't owe to Google?

...

I'd like to limit the traffic data volume from any host to <500M, or <50M in 24hrs. It's all FTP.
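One way to picture a per-host limit like this is a sliding 24-hour byte budget per client address. The Python below is a minimal sketch of just that bookkeeping, assuming the server software lets you run a check before each transfer (a shared hosting account may not allow this, in which case the server's own limit settings, if it has any, are the place to look). `HostQuota` and its 50 MiB default are hypothetical illustrations, not part of any FTP server's API.

```python
from collections import defaultdict, deque

DAY_SECONDS = 24 * 60 * 60
# Assumption: "<50M in 24hrs" read as 50 MiB; adjust to taste.
QUOTA_BYTES = 50 * 1024 * 1024


class HostQuota:
    """Hypothetical per-host byte quota over a sliding time window."""

    def __init__(self, quota_bytes=QUOTA_BYTES, window_seconds=DAY_SECONDS):
        self.quota = quota_bytes
        self.window = window_seconds
        # host -> deque of (timestamp, nbytes) for transfers still inside the window
        self.history = defaultdict(deque)
        # host -> sum of nbytes currently held in self.history[host]
        self.used = defaultdict(int)

    def _expire(self, host, now):
        """Drop transfers that have slid out of the window."""
        entries = self.history[host]
        while entries and now - entries[0][0] >= self.window:
            _, nbytes = entries.popleft()
            self.used[host] -= nbytes

    def allow(self, host, nbytes, now):
        """Record and allow the transfer only if it keeps the host within quota."""
        self._expire(host, now)
        if self.used[host] + nbytes > self.quota:
            return False  # refused transfers are not recorded
        self.history[host].append((now, nbytes))
        self.used[host] += nbytes
        return True


if __name__ == "__main__":
    MB = 1024 * 1024
    q = HostQuota()
    print(q.allow("203.0.113.5", 40 * MB, now=0))            # within budget
    print(q.allow("203.0.113.5", 20 * MB, now=3600))         # 60 MiB would exceed 50 MiB
    print(q.allow("203.0.113.5", 20 * MB, now=DAY_SECONDS))  # first transfer has expired
```

The same class with a larger `quota_bytes` or a longer `window_seconds` would express a different cap; the sliding window avoids the burst a fixed midnight reset would allow.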

I haven't run an FTP server for many years now; the old one here needed a password.

Some parts of my website used to be password protected.

When I ask Google "how to add a captcha to your website", I get:

https://www.oodlestechnologies.com/blogs/create-a-captcha-validation-in-html-and-javascript/

Maybe some HTML guru here knows?

That looks like it's good for accessing an HTML page.

So far the Chinese are accessing the top-level index, where files are offered for download at a click.

Ideally, if they can't access the top level, direct-address access to the files might also be prevented?

What I am doing now is using an http://mywebsite/pub/ directory with lots of files in it that I want to publish, for example in this newsgroup; I then just post a direct link to that file.

So it has no index file and no links to it from the main site. It has many subdirectories too.

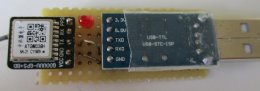

Loading Image...

https://panteltje.nl/pub/pwfax-0.1/README

So you need the exact link to access anything; fine for publishing here...

Maybe Usenet conversations are saved somewhere? Does Google still hold the archive?

I have most of the postings I found interesting saved here, back to 2006, on the Raspberry Pi 4 (8GB) I use for web browsing and Usenet; older ones, back to 1998, are maybe on the old PC upstairs.

```
raspberrypi: ~/.NewsFleX # l
total 692
-rw-r--r-- 1 root root 21971 Jan 9 2006 NewsFleX.xpm
-rw-r--r-- 1 root root 2576 Jul 30 2006 newsservers.dat.bak
drwxr-xr-x 5 root root 4096 Apr 1 2008 news.isu.edu.tw/
drwxr-xr-x 5 root root 4096 Apr 1 2008 textnews.news.cambrium.nl/
-rw-r--r-- 1 root root 1 Mar 5 2009 global_custom_head
drwx------ 4 root root 4096 Dec 6 2009 http/
-rw-r--r-- 1 root root 99 Apr 4 2010 signature.org
-rw-r--r-- 1 root root 8531 Apr 4 2010 signature~
-rw-r--r-- 1 root root 8531 Apr 4 2010 signature
-rw-r--r-- 1 root root 816 Nov 9 2011 filters.dat.OK
drwxr-xr-x 3 root root 4096 Jul 5 2012 nntp.ioe.org/
drwxr-xr-x 2 root root 4096 Mar 30 2015 news.altopia.com/
drwxr-xr-x 25 root root 4096 Mar 1 2020 news2.datemas.de/
drwxr-xr-x 109 root root 4096 Jun 1 2020 news.albasani.net/
drwxr-xr-x 2 root root 4096 Nov 28 2020 setup/
drwxr-xr-x 10 root root 4096 Mar 1 2021 news.ziggo.nl/
drwxr-xr-x 6 root root 4096 Jun 1 2021 news.chello.nl/
drwxr-xr-x 2 root root 4096 Aug 19 2021 news.neodome.net/
drwxr-xr-x 6 root root 4096 Sep 1 2022 news.tornevall.net/
drwxr-xr-x 156 root root 4096 Nov 1 2022 news.datemas.de/
drwxr-xr-x 23 root root 4096 Jan 1 2023 news.aioe.cjb.net/
drwxr-xr-x 4 root root 4096 Jan 1 2023 news.cambrium.nl/
drwxr-xr-x 52 root root 4096 Jan 1 2023 news.netfront.net/
drwxr-xr-x 60 root root 4096 Feb 1 2023 freenews.netfront.net/
-rw-r--r-- 1 root root 1651 Feb 1 2023 urls.dat~
drwxr-xr-x 49 root root 4096 Apr 2 2023 freetext.usenetserver.com/
-rw-r--r-- 1 root root 1698 Apr 18 2023 urls.dat
drwxr-xr-x 15 root root 4096 Aug 2 2023 localhost/
drwxr-xr-x 11 root root 4096 Dec 15 06:57 194.177.96.78/
drwxr-xr-x 190 root root 4096 Dec 15 06:58 nntp.aioe.org/
-rw-r--r-- 1 root root 1106 Feb 23 06:43 error_log.txt
-rw-r--r-- 1 root root 966 Feb 23 13:33 filters.dat~
-rw-r--r-- 1 root root 973 Mar 2 06:28 filters.dat
drwxr-xr-x 57 root root 4096 Mar 3 11:42 news.eternal-september.org/
drwxr-xr-x 14 root root 4096 Mar 3 11:42 news.solani.org/
drwxr-xr-x 197 root root 4096 Mar 3 11:42 postings/
-rw-r--r-- 1 root root 184263 Mar 6 04:45 newsservers.dat~
-rw-r--r-- 1 root root 2407 Mar 6 04:45 posting_periods.dat~
-rw-r--r-- 1 root root 0 Mar 6 06:27 lockfile
-rw-r--r-- 1 root root 87 Mar 6 06:27 kernel_version
-rw-r--r-- 1 root root 107930 Mar 6 06:27 fontlist.txt
-rw-r--r-- 1 root root 184263 Mar 6 06:27 newsservers.dat
-rw-r--r-- 1 root root 2407 Mar 6 06:27 posting_periods.dat
```

....

Lots of news servers came and went over time...

I have backups of my website on hard disk, on optical media, and of course at my hosting provider.